To conduct continuous learning on resource-constrainted devices, efficient learning/transfer algorithms are needed. In this page, we summarize recent representative works and discuss their limitations.

Table of contents

Efficient learning

- [arXiv 2024.01] The Unreasonable Effectiveness of Easy Training Data for Hard Tasks

- [arXiv 2024.03] Branch-Train-MiX: Mixing Expert LLMs into a Mixture-of-Experts LLM

- [ICLR’22] Auto-scaling Vision Transformers without Training

- [ICLR’22] Fast Model Editing at Scale

- [ECCV’22] TinyViT: Fast Pretraining Distillation for Small Vision Transformers

- [ECCV’22] MaxViT: Multi-axis Vision Transformer

- [CVPR’21] Fast and Accurate Model Scaling

- [CVPR’23] FlexiViT: One Model for All Patch Sizes

- [ICML’23] Fast Inference from Transformers via Speculative Decoding

- [CVPR’23] EfficientViT: Memory Efficient Vision Transformer with Cascaded Group Attention

- [arXiv 2023.11] Navigating Scaling Laws: Compute Optimality in Adaptive Model Training

- [ICCV’23] TripLe: Revisiting Pretrained Model Reuse and Progressive Learning for Efficient Vision Transformer Scaling and Sea

- [ICCV’23] FastViT: A Fast Hybrid Vision Transformer using Structural Reparameterization

- [CVPR’22] RepMLP: Re-parameterizing Convolutions into Fully-connected Layers for Image Recognition

- [CVPR’21] RepVGG: Making VGG-style ConvNets Great Again

- [ICLR’23] Re-parameterizing Your Optimizers rather than Architectures

- [Tech Blog] Model Merging: MoE, Frankenmerging, SLERP, and Task Vector Algorithms

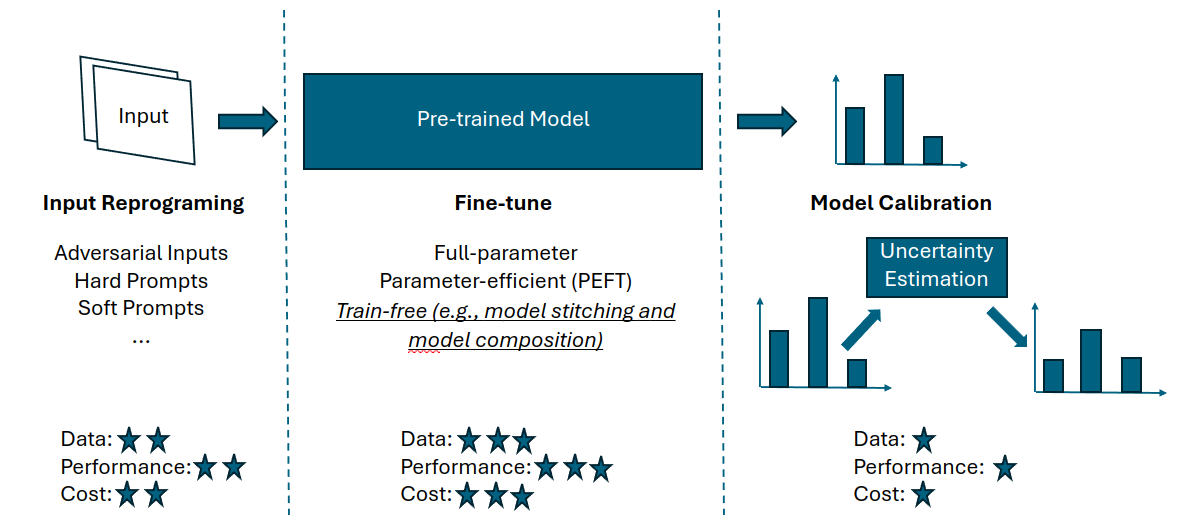

Transfer Learning

Input Reprogramming

- [ICLR’19] Adversarial Reprogramming of Neural Networks

- [ICML’20] Transfer Learning without Knowing: Reprogramming Black-box Machine Learning Models with Scarce Data and Limited Resources

- [ICML’22] Black-Box Tuning for Language-Model-as-a-Service

- [CVPR’22] Rep-Net: Efficient On-Device Learning via Feature Reprogramming

- [IJCAI’23] Black-box Prompt Tuning for Vision-Language Model as a Service

- [CVPR’23] BlackVIP: Black-Box Visual Prompting for Robust Transfer Learning

- [ICASSP’24] Efficient Black-Box Speaker Verification Model Adaptation with Reprogramming and Backend Learning

- [arXiv 2024.02] Connecting the Dots: Collaborative Fine-tuning for Black-Box Vision-Language Models

Fine-tune

- [ICML’21] Zoo-Tuning: Adaptive Transfer from a Zoo of Models

- [NeurIPS’21] Revisiting Model Stitching to Compare Neural Representations

- [ICLR’22] LoRA: Low-Rank Adaptation of Large Language Mode

- [EMNLP’22] BBTv2: Towards a Gradient-Free Future with Large Language Models

- [ICLR’23] Editing models with task arithmetic

- [CVPR’23 Best Paper Award] Visual Programming: Compositional visual reasoning without training

- [ICCV’23] A Unified Continual Learning Framework with General Parameter-Efficient Tuning

- [ICLR’24] Mixture of LoRA Experts

- [ICLR’24] Batched Low-Rank Adaptation of Foundation Models

- [arXiv 2023.11] PrivateLoRA For Efficient Privacy Preserving LLM

- [arXiv 2024.02] BitDelta: Your Fine-Tune May Only Be Worth One Bit

- [arXiv 2024.02] LoraRetriever: Input-Aware LoRA Retrieval and Composition for Mixed Tasks in the Wild

- [arXiv 2023.07] LoraHub: Efficient Cross-Task Generalization via Dynamic LoRA Composition

- [arXiv 2024.05] Trans-LoRA: towards data-free Transferable Parameter Efficient Finetuning

- [arXiv 2023.08] IncreLoRA: Incremental Parameter Allocation Method for Parameter-Efficient Fine-tuning

- [arXiv 2024.02] Evolutionary Optimization of Model Merging Recipes

Model Calibration

- [NeurIPS’21] Revisiting the Calibration of Modern Neural Networks

- [EMNLP’23] CombLM: Adapting Black-Box Language Models through Small Fine-Tuned Models

- [CVPR’24] Efficient Test-Time Adaptation of Vision-Language Models